Apple to start scanning iPhones for child sex abuse images

Apple has announced that, beginning with the upcoming iOS version, it will scan iPhones for Child Sexual Abuse Material (CSAM). If known CSAM images are detected in iCloud Photos, they will be reviewed manually and, if necessary, reported to the National Center for Missing & Exploited Children (NCMEC).

Tech giant Apple has announced plans to scan images stored on iPhones for child sexual abuse material in order to help law enforcement identify child abusers. According to the company, the system will use a database of known CSAM image hashes to match photos on devices before they are uploaded to iCloud. This means that Apple will not be able to view actual images on your device unless they match a known CSAM image hash. The CSAM database is provided by NCMEC and other organizations dealing with child abuse.

“Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users’ devices,” Apple’s announcement of new child protection features states.
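The matching step described above can be illustrated with a minimal Python sketch. It assumes a plain SHA-256 digest as a stand-in fingerprint, which only matches byte-identical files; the article does not describe the hash function Apple actually uses, and the names `image_digest`, `find_matches`, and the sample data are hypothetical.

```python
import hashlib


def image_digest(image_bytes: bytes) -> str:
    """Stand-in fingerprint: SHA-256 of the raw bytes (an assumption, not Apple's hash)."""
    return hashlib.sha256(image_bytes).hexdigest()


def find_matches(photos: dict[str, bytes], known_hashes: set[str]) -> list[str]:
    """Return the names of photos whose digest appears in the known-hash set."""
    return [name for name, data in photos.items()
            if image_digest(data) in known_hashes]


# Hypothetical data: the database holds only hashes, never the images themselves.
known_hashes = {image_digest(b"known-flagged-sample")}
photos = {
    "beach.jpg": b"holiday photo bytes",
    "copy.jpg": b"known-flagged-sample",
}

flagged = find_matches(photos, known_hashes)
print(flagged)
```

Only the photo whose bytes equal a known sample is reported; an unrelated photo never matches, because the comparison looks up a fingerprint in a fixed list rather than judging what the image shows.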

If a match is found, the image would be reviewed by a human to confirm that it is a match. The account belonging to the person on whose iPhone the image was found would then be disabled, and a report would be sent to NCMEC. Users with flagged accounts will have the option to appeal to have them reinstated.

It should be mentioned that Apple already checks iCloud for known child abuse images, as do most cloud providers. However, the new system would allow Apple access to local storage. Apple is careful to emphasize that users’ privacy will be protected, as Apple will only learn about users’ photos when there is CSAM imagery on the account.

The announcement has already raised concerns among consumers, with many questioning whether regular photos of their children would be flagged as potential child abuse imagery. However, it should be emphasized that only known child abuse images in the CSAM database will be flagged. Other concerns were also raised, with some users believing that such a system could be misused by authoritarian governments and law enforcement agencies to scan for prohibited content and/or for political speech and criticism, as well as spy on citizens.

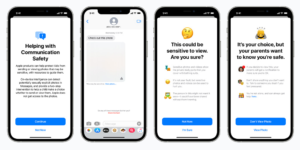

Apple will begin scanning for CSAM images with iOS 15 and iPadOS 15, which will be released later this year. In addition, Apple introduced a new Communication Safety feature for the Messages app that will warn children and parents when a sexually explicit photo is sent or received.

Communication Safety will be an opt-in feature limited to children and will have to be enabled by parents through the Family Sharing feature. If the feature is enabled, sexually explicit images will be blurred, and children will be warned that if they open them, they may be exposed to sensitive content. If an image is opened, parents would receive a notification. Children will also be warned when trying to send a sexually explicit image, and parents would be informed about the image being sent.

According to Apple, the company does not get access to these images. Rather, the Messages app uses on-device machine learning for analyzing image attachments and determining whether they are sexually explicit.
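The Communication Safety flow for a received image, as described above, can be sketched as a small decision function. This is an illustrative Python model only: `Outcome`, `handle_received_image`, and their parameters are hypothetical names, and `is_explicit` stands in for the result of the on-device classifier, which is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    blurred: bool
    child_warned: bool
    parents_notified: bool


def handle_received_image(feature_enabled: bool,
                          is_explicit: bool,
                          child_opens: bool) -> Outcome:
    """Model what happens when a child account receives an image."""
    # Nothing happens unless parents opted in and the image was classified as explicit.
    if not (feature_enabled and is_explicit):
        return Outcome(blurred=False, child_warned=False, parents_notified=False)
    # The image is blurred and the child is warned; parents are notified
    # only if the child chooses to open the image anyway.
    return Outcome(blurred=True, child_warned=True, parents_notified=child_opens)


print(handle_received_image(True, True, child_opens=False))
print(handle_received_image(True, True, child_opens=True))
```

The sketch covers only the receiving side; the sending side described in the article (a warning to the child and a notice to parents) would follow a similar pattern.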
